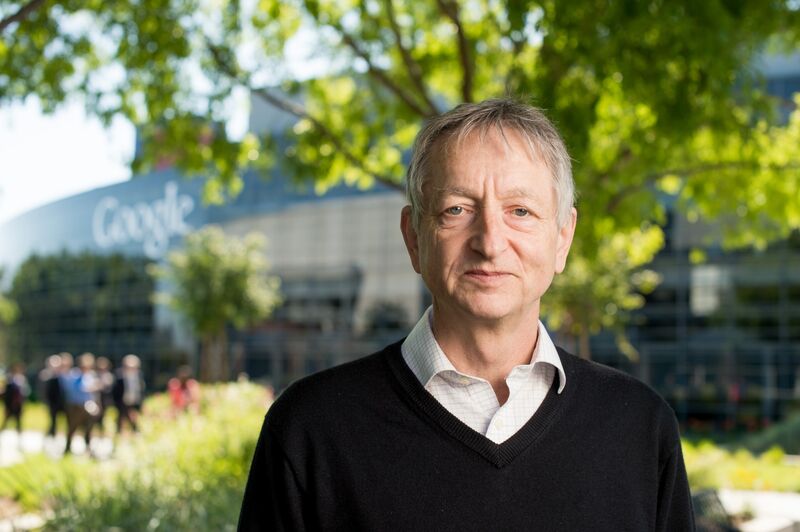

AI’s ‘Godfather’ Should Have Spoken Up Sooner

Hopefully, Google researcher Geoffrey Hinton’s warnings about the technology’s potential harms will persuade other researchers to come forward.

It is hard not to be worried when the so-called godfather of artificial intelligence, Geoffrey Hinton, says he is leaving Google and regrets his life’s work.

Hinton, who made a crucial contribution to AI research in the 1970s with his work on neural networks, told several news outlets this week that big technology companies were moving too fast in releasing AI to the public. Part of the problem was that AI was achieving human-like capabilities more quickly than experts had forecast. “That’s scary,” he told the New York Times.

Hinton’s concerns certainly make sense, but they would have been more effective if they had come several years earlier, when other researchers who didn’t have retirement to fall back on were ringing the same alarm bells.

Tellingly, Hinton sought in a tweet to clarify how the New York Times had characterized his motivations, worried that the article suggested he had left Google to criticize it. “Actually, I left so that I could talk about the dangers of AI without considering how this impacts Google,” he said. “Google has acted very responsibly.”

While Hinton’s prestige in the field may have shielded him from blowback, the episode highlights a chronic problem in AI research: Big technology companies have such a stranglehold on AI research that many of their scientists are afraid of airing their concerns for fear of harming their career prospects.

You can understand why. Meredith Whittaker, a former research manager at Google, had to spend thousands of dollars on lawyers in 2018 after she helped organize the walkout of 20,000 Google employees over the company’s contracts with the US Department of Defense. “It’s really, really scary to go up against Google,” she tells me. Whittaker, who is now CEO of encrypted messaging app Signal, eventually resigned from the search giant with a public warning about the company’s direction.

Two years later, Google AI researchers Timnit Gebru and Margaret Mitchell were fired from the tech giant after they released a research paper that highlighted the risks of large language models, the technology now at the center of concerns over chatbots and generative AI. They pointed to issues such as racial and gender bias, inscrutability and environmental cost.

Whittaker bristles at the fact that Hinton is now the subject of glowing portraits of his contributions to AI after others took much greater risks to stand up for what they believed while they were still employed at Google. “People with much less power and more marginalized positions were taking real personal risks to name the issues with AI and with corporations controlling AI,” she says.

Why didn’t Hinton speak out earlier? The scientist declined to respond to questions. But he appears to have been concerned about AI for some time, including in the years his colleagues were agitating for a more cautious approach to the technology. A 2015 New Yorker article describes him talking with another AI researcher at a conference about how politicians could use AI to terrorize people. When asked why he was still doing the research, Hinton replied: “I could give you the usual arguments, but the truth is that the prospect of discovery is too sweet.” It was a deliberate echo of J. Robert Oppenheimer’s famous description of the “technically sweet” appeal of working on the atomic bomb.

Hinton says that Google has acted “very responsibly” in its deployment of AI. But that’s only partly true. Yes, the company did shut down its facial recognition business over concerns of misuse, and it did keep its powerful language model LaMDA under wraps for two years in order to work on making it safer and less biased. Google has also restricted the capabilities of Bard, its rival to ChatGPT.

But being responsible also means being transparent and accountable, and Google’s history of stifling internal concerns about its technology does not inspire confidence.

Hinton’s departure and warnings will hopefully inspire other researchers at big technology companies to speak up about their concerns.

Technology companies have swallowed up some of the brightest minds in academia thanks to the lure of high salaries, generous benefits and the massive computing power used to train and experiment on ever-more-powerful AI models.

But there are signs that some researchers are at least considering being more vocal. “I often think about when I would quit [AI startup] Anthropic or leave AI entirely,” tweeted Catherine Olsson, a member of technical staff at AI safety company Anthropic, on Monday, in response to Hinton’s remarks. “I can already tell this move will influence me.”

Many AI researchers seem to have a fatalistic acceptance that little can be done to stem the tide of generative AI, now that it has been unleashed on the world. As Anthropic co-founder Jared Kaplan told me in an interview published Tuesday, “the cat is out of the bag.”

But if today’s researchers are willing to speak up now, while it matters, and not right before they retire, we are all likely to benefit.